The objective here is to share a Robust, Consistent logging solution for Mule Cloudhub apps that are easy to maintain, visualize and track down software bugs or production issues quickly.

Logging is a powerful aid for understanding and debugging programs run-time behavior. Logs capture and persist important data and make it available for analysis at any point in time.

This article deals with creating a logging framework for MuleSoft apps that will suit any enterprise which is concerned about consistent and structured logging, zero log message loss and log analyzing.

Logging in CloudHub:

We don’t need to be concerned with the logging configuration inside Mule apps when we choose to deploy them to CloudHub. CloudHub writes all the logs to the console, provides a way to search by keyword and by the level and gives us an ability to change applications root log level on the fly, and to switch to debug mode and such.

So, why not use CloudHub’s default logging then?

Whenever Mule apps are deployed to CloudHub, logging capabilities can quickly become very limited in terms of visibility, persistence etc. especially when you have large amounts of logs that are generated by applications. Here are some things that you have to keep in mind before considering default CloudHub logging as your long-term thing.

- Persists logs only up to 100 MB or 30 days whichever comes first.

- After you delete applications, you lose all logs of that application.

- The only way to see logs is by logging into Anypoint platform and see CloudHub console per application.

- Cannot ship logs to your desired system easily, they can only be accessed via the REST API.

- No single place to analyze all your applications logs especially if you have API-led architecture. This can help to take some preventive measures.

So…

- We need a logging framework that can generate logs in a consistent and structured manner.

- Use any external log analyzer like ELK and feed all the logs to it to analyze your data in the most efficient way.

First things first.

- Raise a support ticket with MuleSoft to disable CloudHub logging. This is the setting that MuleSoft team has to do to in order for you to override the default CloudHub log4j2 configuration with your own custom application configuration.

- Setup Elastic stack(E.L.K) to analyze log data. Two things to consider here.

- As those three products are open source, you can use this docker image to set up your own environment and maintain all the configurations, deployments, disaster recovery, security, backup, etc.

- There is a company called elastic.co which takes care of all the pain in managing instances, disaster recovery, backup, etc. and they provide a way to set up stack on-premise or on their managed cloud.

Note: When you enable custom logging in CloudHub, You can change the application's loggers log level on the fly via CloudHub API. But you cannot see a place in UI to do so. Hope it get fixed soon.

Design a Custom logger for your Mule applications:

The default Mule logger is simple to use for logging but can be too simple because it doesn’t enforce any standards or best practices. It also doesn’t provide a way to generate structured or consistent log messages. Designing a custom logger is the best solution here to generate structured logs and to enforce standards on the log data to be generated and of course, for seamless developer usage. The idea of this module should be to generate consistently structured logs in JSON format.

It would be very good to provide a mule custom module for a developer to use for logging, requires specific information, and also be extended with additional information as key-value pairs, to log at a certain point, in a neat GUI. Then it’s the custom module’s job to take all the information, convert that data to JSON and log.

Here is the look of Mule Custom Logger developed by AVIO to use for any log purposes.

And this module will produce a JSON like this

|

This logger has been open-sourced under BSD 2-Clause “Simplified” License in GitHub. You can find it here.

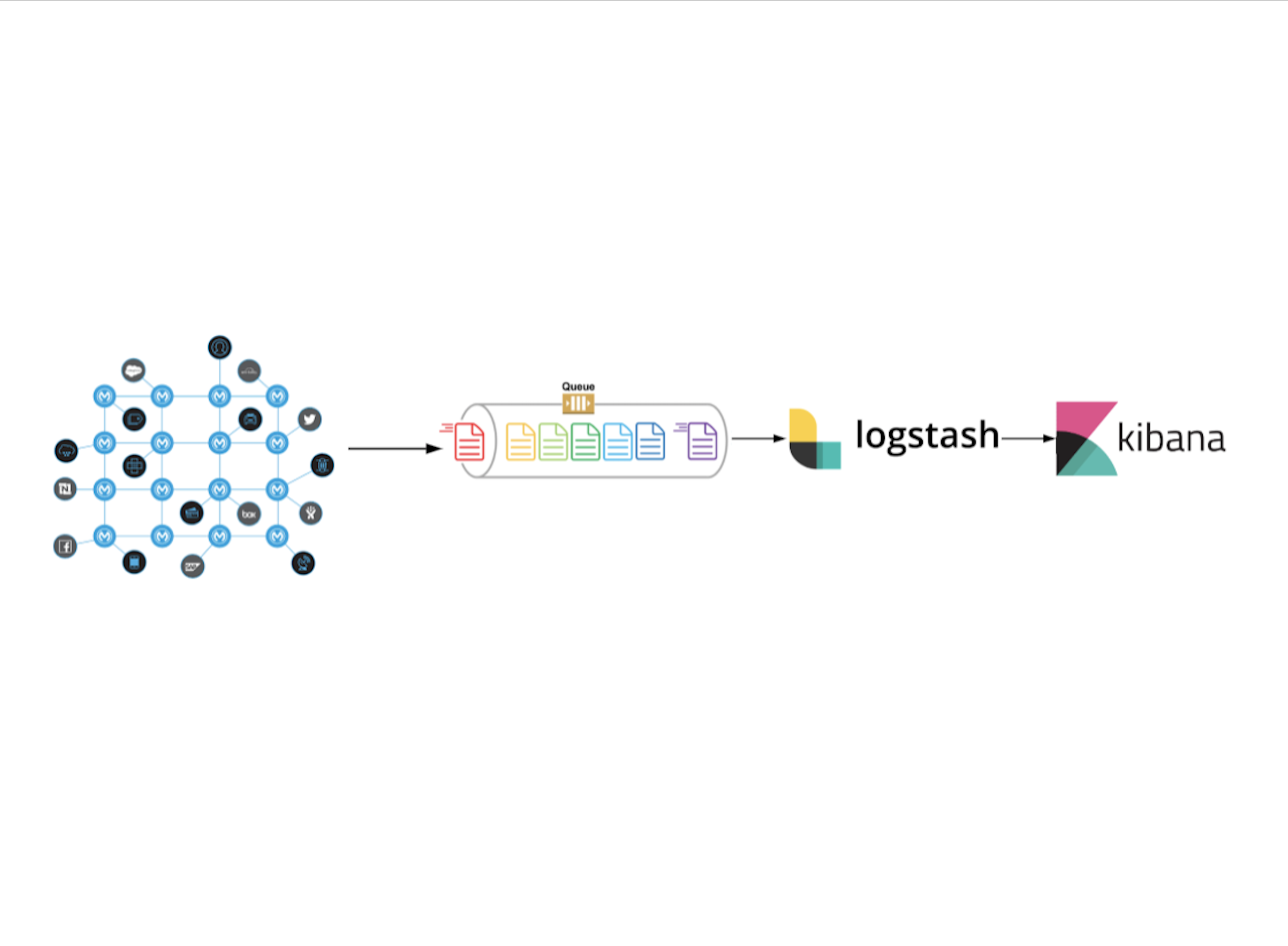

We now have to feed this JSON to the ELK stack where Logstash will read this JSON message, parse it and ships to elasticsearch. Here is the high-level overview of ELK Stack. Now we have a great UI and a mechanism to generate logs in a consistent structured manner. Also if we ever need to change the implementation of how and where we are logging to, the custom module can be updated and each project simply needs to update to the latest version of the module.

When you disabled CloudHub logging for an application, There are multiple ways to ship logs from CloudHub to any external system(Logstash in our case). Here are some.

- Use Log4j2 appenders like socket appender to post all logs to a specified destination.

- Use MuleSoft provided CloudHub API to retrieve logs periodically and send to your destination.

-

[Recommended]: Use a log4j2 appender to push all your CloudHub logs to Amazon SQS queue and have Logstash read messages from it. This way, we can scale SQS queue for high availability when there is huge log flow and we can ensure zero log message loss from CloudHub to Logstash as there is a highly scalable queue in between. Here is the code for the custom log4j2 appender to push all application logs to AWS SQS queue.

MuleSoft Health Check

Can MuleSoft Health Check Accelerate Your Digital Evolution?

{{cta(’48d25112-e97f-4e5e-bb34-daba7a364f4a’)}}

Logstash Pipeline:

Considering that you have Logstash setup, you can write pipelines in Logstash to read messages from the queue, parse them and send to elasticsearch.

Here is an example of Logstash pipeline that reads a message from AWS SQS queue, parses each message and send to elastic to store.

input {

sqs {

id => "mule-sqs"

access_key_id => "<<aws-access-key>>"

secret_access_key => "<<aws-secret>>"

id_field => "sqs.id"

md5_field => "sqs.md5"

sent_timestamp_field => "sqs.sent_time"

polling_frequency => 5

queue => "<<sqs-queue-name>>"

region => "<<aws-region>>"

codec => "plain"

}

}

filter {

grok {

match => {

message => "%{WORD}%{SPACE}%{TIMESTAMP_ISO8601:logdate}s[%{GREEDYDATA:thread}]%{SPACE}%{GREEDYDATA}: %{GREEDYDATA:message}"

}

overwrite => ["message"]

}

date {

match => ["logdate", "yyyy-MM-dd HH:mm:ss,SSS", "ISO8601"]

}

json {

source => "message"

}

}

output {

elasticsearch {

hosts => "<<elastic-host>>"

ssl => true

manage_template => false

index => "mulesoft-%{+YYYY.MM.dd}"

user => "<<elastic-username>>"

password => "<<elastic-password>>"

codec => "plain"

}

}Here is AVIO Consulting’s log4j2 custom log appender to ship all the application logs to a specified Amazon SQS queue. You can find this custom appender here. It is open-sourced as well under BSD 2-Clause “Simplified” License.

This Logstash pipeline has 3 parts.

- Input{}: This has some configuration to read a message from the specified AWS SQS queue.

- Filter{}: This takes each log message from SQS queue, reads and parses it as JSON, creates appropriate fields based on the incoming JSON.

- Output{}: This has the configuration to send all parsed messages to a specified destination. It should be the elastic endpoint in this case.

As Logstash reads and parses the log data, elastic on the other hand stores all the data with the appropriate fields as we see below in Kibana.

In the above picture, you are seeing details about one log message in Kibana dashboard that is generated by our custom logger. You can also see all the JSON fields from the log message on the left pane.

Note that the Logstash pipeline is reading the entire JSON log message from SQS queue and creates corresponding fields to each of the key-value pairs out of that incoming JSON. When you have a dedicated field in elastic for each of the data points you send from your mule app, it is incredibly easy to create stunning dashboards and charts in Kibana.

Now you can use Kibana to create charts and dashboards out of the data point fields in elastic for much better visualization of log messages. You can also configure your elastic instance to persist logs for an extended period of time as well as provide rollups and archiving options, things that aren’t possible in standard Cloudhub logging.

We have now streamlined the generation of consistently structured log data from the Mule application and transported it to a highly available AWS SQS queue, where it was read and parsed by Logstash, and then sent to elasticsearch with all the fields for you to play with inside Kibana.

{{cta(‘9cb7c1f5-10c2-451f-9e49-61c239a93854′,’justifycenter’)}}